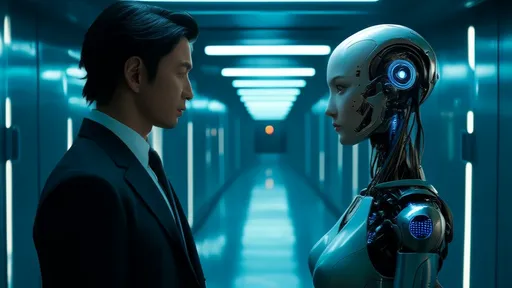

The digital landscape is currently grappling with a profound and unsettling reality: the rapid advancement of artificial intelligence is being shadowed by an alarming surge in its misuse. What was once the domain of science fiction and academic debate has erupted into a pressing global concern, dominating headlines and policy discussions from Silicon Valley to Brussels. The very tools heralded as the engines of a new technological renaissance are being weaponized, manipulated, and exploited, forcing a stark reevaluation of our relationship with intelligent machines.

This is not a distant, hypothetical threat. The evidence of abuse is already woven into the fabric of our daily digital interactions. Phishing campaigns powered by AI that can mimic human writing styles and voices with unsettling accuracy have become far more convincing, duping individuals and compromising corporate networks. Deepfake technology, once a novel parlor trick, is now a potent tool for disinformation: it can produce convincing videos of public figures saying things they never said, threatening to destabilize political processes and erode public trust in media.

Beyond the political sphere, the harm is deeply personal. AI-driven surveillance systems are being deployed by authoritarian regimes to enable unprecedented social control and suppress dissent, tracking citizens' movements and monitoring their communications. In the financial world, algorithmic systems can be designed to commit complex fraud or manipulate markets at speeds far beyond what human regulators can monitor. The architecture of these systems, often opaque even to their creators, creates a veil behind which malicious activity can flourish.

The escalating frequency and sophistication of these incidents have triggered a seismic shift in public and governmental sentiment. The initial wave of unbridled optimism about AI's potential is now tempered by a growing sense of caution, even apprehension. A chorus of voices from academia, civil society, and within the tech industry itself is rising, demanding action. The call for robust, forward-thinking regulation is no longer a whisper from the fringe; it has become a central plank in policy platforms worldwide.

This burgeoning movement for oversight argues that the current legal and ethical frameworks are woefully inadequate for the challenges posed by modern AI. Existing laws were drafted for a different technological era and are struggling to keep pace with systems that can learn, adapt, and operate autonomously. The core of the debate centers on a critical balancing act: how to craft regulations that effectively curb misuse and protect citizens without stifling the innovation that drives economic growth and solves complex problems.

Proposed regulatory measures are diverse and complex. Some advocate strict licensing requirements for the development of high-risk AI applications, particularly in fields like facial recognition and autonomous weapons. Others push for stringent transparency and accountability mandates, requiring companies to document their AI's decision-making processes and undergo rigorous third-party audits. There is also a powerful argument for establishing clear legal liability, determining who is at fault (the developer, the user, or the entity deploying the AI) when an autonomous system causes harm.

Internationally, the response is fragmented but gaining momentum. The European Union is at the forefront with its ambitious Artificial Intelligence Act, which establishes a risk-based regulatory framework and outright bans certain applications deemed to pose an unacceptable risk. In the United States, the policy conversation is more fractured, with a mix of proposed federal legislation and state-level initiatives beginning to take shape. Meanwhile, global bodies like the OECD and UNESCO have adopted principles for the ethical development of AI and are working to turn them into shared international norms, recognizing that a patchwork of national laws is insufficient for a borderless technology.

However, the path to effective regulation is fraught with immense challenges. The breakneck speed of AI innovation means that any specific law risks being obsolete by the time it is enacted. Defining key concepts like "bias," "fairness," and even "intelligence" in a legal context is a philosophical and technical minefield. Furthermore, there is a palpable fear, particularly within the tech sector, that heavy-handed regulation could cement the advantage of large, established corporations with the resources to comply, while crushing startups and smothering the open-source collaboration that has fueled much of AI's progress.

Yet, the cost of inaction is increasingly viewed as unacceptable. The potential for AI to exacerbate inequality, undermine democratic institutions, and infringe upon fundamental human rights is too great to ignore. The debate has moved beyond abstract ethical principles into the concrete realm of risk management and harm prevention. The question is no longer if we should regulate artificial intelligence, but how we can do so wisely, effectively, and with a common purpose.

The coming years will be a defining period for the future of technology and society. The decisions made by policymakers, technologists, and citizens today will shape the AI landscape for generations. Navigating this complex terrain requires a nuanced, collaborative, and urgent effort to build guardrails that ensure artificial intelligence serves as a force for empowerment and progress, rather than a tool for deception, control, and harm. The world is watching, and the time for action is now.

By /Aug 27, 2025